The Shocking Future of Neuromorphic Computing! Computers that mimic the human brain? What's next for the AI revolution?

No longer science fiction! Neuromorphic computing, which embodies the working principles of the human brain, is breaking the limits of artificial intelligence and heralding a next-generation revolution. Unimaginable speed, overwhelming efficiency, and intelligence never before experienced! Check out how brain-like computers will change our future right now!

Contents

- 1. Introduction: Brain-like computers, a revolution that will change the future of artificial intelligence

- 2. Blueprint of the human brain: Neurons and synapses, turning dream computing into reality

- 3. How Neuromorphic Chips Work: The Magic of Parallel Processing and Event-Driven Computing

- 4. Crucial differences from existing computers: Beyond the von Neumann architecture

- 5. Current Development Status and Key Players: Who is Creating the Future?

- 6. Why Neuromorphic Computing Matters: Disruptive Potential

- 7. Mountains to overcome and challenges ahead: The road ahead until commercialization

- 8. Conclusion: The era of computers that think like the brain is coming

- 9. Summary

1. Introduction: Brain-like computers, a revolution that will change the future of artificial intelligence

The computers we use every day are excellent at handling complex tasks, but they fall short of the incredible efficiency and adaptability of the human brain. To overcome these limitations and open new horizons in artificial intelligence, scientists are researching innovative computing paradigms that mimic the way the human brain works. That paradigm is neuromorphic computing.

This strange yet fascinating technology has the potential to break the mold of conventional computers and shake up the landscape of artificial intelligence, robotics, and the future technologies we imagine. Let’s take a deep dive into the world of neuromorphic computing: computers that resemble the human brain.

2. Blueprint of the human brain: Neurons and synapses, turning dream computing into reality

The core idea of neuromorphic computing is to implement in computer hardware the way the brain's fundamental building blocks, neurons and synapses, connect and process information. The brain's many neurons perform complex cognitive functions by exchanging electrical and chemical signals with each other. The strength and pattern of the connection points between them, the synapses, play a critical role in learning and memory. Drawing on this biological inspiration, neuromorphic computing aims to build a computing architecture that processes information efficiently by interconnecting many processing units (artificial neurons) and adjusting the strength of the connections between them (artificial synapses).
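As a rough illustration of an artificial neuron and synapse, the sketch below uses a leaky integrate-and-fire model, a common textbook simplification that this article does not itself name. The class `LIFNeuron`, the function `run`, and every parameter value (threshold, leak, weights) are assumptions chosen for readability, not values taken from any real chip.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron (illustrative parameters only)."""
    threshold: float = 1.0   # potential at which the neuron fires
    leak: float = 0.9        # fraction of potential kept per time step
    potential: float = 0.0   # current membrane potential

    def step(self, input_current: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run(weight: float, input_spikes: list) -> list:
    """Feed a 0/1 spike train through one synapse of the given weight."""
    neuron = LIFNeuron()
    return [int(neuron.step(weight * s)) for s in input_spikes]


if __name__ == "__main__":
    # A stronger synapse makes the downstream neuron fire sooner.
    print(run(0.4, [1, 1, 1, 1, 1, 1]))  # [0, 0, 1, 0, 0, 1]
    print(run(1.0, [1, 0, 1]))           # [1, 0, 1]
```

With these illustrative values, a synapse of weight 0.4 needs three consecutive input spikes before the neuron fires, while a very weak synapse (for example 0.05) never pushes the potential over the threshold. That is the sense in which connection strength shapes what the network does.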

3. How Neuromorphic Chips Work: The Magic of Parallel Processing and Event-Driven Computing

Conventional computers separate the central processing unit (CPU) from memory and execute instructions sequentially. Neuromorphic chips instead rely on parallel processing: like the brain's neural network, a large number of artificial neurons process information simultaneously, which can make specific tasks much faster. Neuromorphic chips are also event-driven, again like the brain. Instead of all neurons being active and consuming energy all the time, a neuron performs computation only when a specific event (a signal) arrives, which keeps energy use low. This way of working suggests that complex cognitive tasks could be processed with much less power than on conventional computers.
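The event-driven idea can be sketched in a few lines of software. The example below is a simplified simulation, not how any particular chip is implemented: it assumes a small feed-forward network with no cycles and no leak, and the names `event_driven_run`, `synapses`, and `thresholds` are hypothetical. The point it shows is that work is done only for neurons that actually receive a spike, while idle neurons cost nothing.

```python
from collections import deque


def event_driven_run(synapses, thresholds, initial_spikes):
    """Propagate spikes through a feed-forward network (assumed acyclic).

    synapses:       dict mapping source neuron -> list of (target, weight)
    thresholds:     dict mapping every neuron -> firing threshold
    initial_spikes: neurons that fire at the start
    Returns (neurons that fired, in order; number of synaptic updates).
    """
    potential = {name: 0.0 for name in thresholds}
    queue = deque(initial_spikes)   # pending spike events
    fired = []
    updates = 0
    while queue:
        source = queue.popleft()
        fired.append(source)
        # Only the targets of this spike are touched.
        for target, weight in synapses.get(source, []):
            updates += 1
            potential[target] += weight
            if potential[target] >= thresholds[target]:
                potential[target] = 0.0   # reset and emit a new event
                queue.append(target)
    return fired, updates


if __name__ == "__main__":
    synapses = {"a": [("c", 0.6)], "b": [("c", 0.6)], "c": [("d", 1.0)]}
    thresholds = {name: 1.0 for name in "abcdefgh"}  # e..h stay idle
    print(event_driven_run(synapses, thresholds, ["a", "b"]))
    # (['a', 'b', 'c', 'd'], 3)
```

In this toy network of eight neurons, only three synaptic updates are performed. A clock-driven simulation would instead visit all eight neurons on every time step, whether or not anything had happened to them.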

4. Crucial differences from existing computers: Beyond the von Neumann architecture

Neuromorphic computing differs fundamentally from the von Neumann architecture that most computers follow today. In a von Neumann machine, the central processing unit (CPU) and memory are separate, data is moved back and forth between them, and calculations are processed sequentially. This suits general high-performance calculation, but it runs into bottlenecks and high power consumption in artificial intelligence tasks where large-scale parallel processing and energy efficiency are important. Neuromorphic computing, by contrast, integrates computation and memory into basic units called neurons and attempts to overcome these limitations through parallel processing and event-based operation. This gives it the potential to perform much better than conventional computers, especially in real-time data processing, pattern recognition, and autonomous learning.

5. Current Development Status and Key Players: Who is Creating the Future?

Neuromorphic computing is still in the early stages of research and development, but research and investment are already active worldwide. IBM's TrueNorth chip integrates about one million artificial neurons and has shown remarkable energy efficiency on specific tasks such as image recognition. Intel is developing its Loihi chip, which uses an architecture based on spiking neural networks (SNNs) and is being used for research on a range of artificial intelligence applications. The SpiNNaker project is contributing to brain science research and artificial intelligence development by building a neuromorphic system specialized for large-scale parallel processing. In addition, numerous universities, research institutes, and startups are working to commercialize neuromorphic computing, and it is attracting attention as one of the important directions for future artificial intelligence and computing technology.

6. Why Neuromorphic Computing Matters: Disruptive Potential

Neuromorphic computing transcends the limitations of conventional computers and offers revolutionary possibilities:

- High-efficiency artificial intelligence: Like the human brain, it could process complex tasks such as pattern recognition, image/voice processing, and natural language understanding in real time with far less energy than conventional hardware, enabling powerful artificial intelligence functions in environments such as mobile devices, wearable devices, and IoT devices.

- Real-time data processing: It is advantageous for quickly analyzing large-scale data such as sensor data streams and real-time image analysis and making decisions, allowing for innovative solutions in fields such as autonomous driving, smart cities, and industrial automation.

- Self-learning and adaptive: By mimicking the brain's learning mechanisms, we can build smarter and more flexible artificial intelligence systems by improving their ability to adapt and learn on their own from new data.

- A new computing paradigm: It can open a new computing paradigm where hardware itself performs intelligent information processing functions, moving away from existing software-centric computing, and bring about fundamental changes in future technological development.
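The "self-learning and adaptive" point in the list above can be made more concrete with one learning rule that is often studied for spiking systems: spike-timing-dependent plasticity (STDP). This article does not say which rule any given chip uses, so the sketch below is only an illustrative choice. The function names and the constants `a_plus`, `a_minus`, and `tau` are assumptions, not values from real hardware.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.05, a_minus=0.055, tau=20.0):
    """Weight change for one pre/post spike pair (pair-based STDP).

    If the input (pre) spike arrives before the output (post) spike, the
    synapse is strengthened; if it arrives after, it is weakened. The
    effect fades exponentially as the two spikes get further apart.
    Times are in arbitrary units; all constants are illustrative.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        return -a_minus * math.exp(dt / tau)
    return 0.0


def apply_stdp(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Update a synaptic weight and keep it inside [w_min, w_max]."""
    new_weight = weight + stdp_delta(t_pre, t_post)
    return min(w_max, max(w_min, new_weight))


if __name__ == "__main__":
    w = 0.5
    w = apply_stdp(w, t_pre=10.0, t_post=15.0)  # input helped cause output
    print(w > 0.5)
    w = apply_stdp(w, t_pre=30.0, t_post=22.0)  # input came too late
    print(w)
```

The rule is local: each synapse needs only the timing of the two neurons it connects, which is one reason rules of this kind are considered a natural fit for hardware in which memory and computation sit together.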

Neuromorphic computing is still a technology in its early stages, but it is expected to be used in a variety of innovative ways in the future. Here are some scenarios of how it can be used and in what form it will come to us, based on current research and development directions.

1. Ultra-efficient artificial intelligence device:

- form: It could come in the form of small neuromorphic chips embedded in smartphones, wearable devices, and IoT sensors.

- use: Current AI functions (such as voice recognition and image recognition) can be processed in real time with much less power, dramatically increasing battery life, and more complex AI calculations can be performed on the device itself without a cloud connection. For example, this could take the form of smart glasses analyzing the surrounding environment in real time and providing personalized information, or hearing aids filtering out ambient noise and amplifying only specific voices.

2. Intelligent Robots and Autonomous Systems:

- form: It can be integrated into a form that acts as the brain of service robots, industrial robots, drones, and autonomous vehicles.

- use: By greatly improving real-time sensor data processing and environmental awareness, it could enable faster and more accurate judgment and control. Robots may appear that learn and adapt on their own and perform tasks without human intervention in complex and unpredictable environments. Examples include robots that carry out rescue operations at disaster sites by understanding the changing environment in real time, or robots that transport goods through a logistics warehouse by finding the optimal route.

3. Next-generation computing accelerators:

- form: It could be developed as an auxiliary processor that connects to an existing computer system and accelerates specific AI operations.

- use: In tasks that require high-performance computing, such as large-scale data analysis, complex simulations, and deep learning model training, it can dramatically improve computational speed and reduce energy consumption by cooperating with existing CPUs or GPUs. For example, it can be used to perform massive molecular simulations more quickly in the new drug development process or to analyze complex patterns in financial markets in real time.

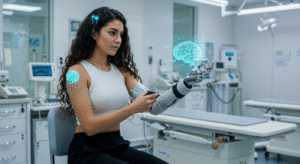

4. Personalized biomimetic interfaces:

- form: Combined with brain-computer interface (BCI) technology, this could allow you to control devices with just your thoughts, or provide more intuitive user interfaces that mimic the way the brain processes signals.

- use: It could be used to help rehabilitate patients with neurological disorders, or to increase immersion in virtual reality (VR) and augmented reality (AR) environments. For example, it could allow paralyzed patients to control robotic arms with just their thoughts, or let interfaces change automatically based on the user’s emotional state.

5. New forms of data storage and processing:

- form: It could potentially lead to entirely new types of memory devices and computing systems that store and process information in ways that are different from traditional digital memory.

- use: Similar to the associative memory of the human brain, it can perform pattern-based information retrieval and processing very efficiently. This can bring about revolutionary changes in analyzing large amounts of unstructured data and building knowledge graphs.
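Pattern-based retrieval of the associative-memory kind described above is classically illustrated with a Hopfield network. The sketch below is that textbook model in plain Python, not a description of any neuromorphic memory device. The function names `train` and `recall` and the example patterns are assumptions for illustration.

```python
def train(patterns):
    """Build Hopfield weights from patterns of +1/-1 values (Hebbian rule)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += pattern[i] * pattern[j] / n
    return weights


def recall(weights, cue, max_sweeps=10):
    """Start from a noisy cue and update units until the state is stable."""
    state = list(cue)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            total = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if total >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


if __name__ == "__main__":
    stored = [
        [1, 1, 1, 1, -1, -1, -1, -1],
        [1, -1, 1, -1, 1, -1, 1, -1],
    ]
    weights = train(stored)
    noisy = [-1, 1, 1, 1, -1, -1, -1, -1]  # first pattern, one value flipped
    print(recall(weights, noisy) == stored[0])  # True
```

The memory is addressed by content rather than by location: a partial or corrupted pattern is enough to pull out the full stored one, and the stored information lives in the connection weights themselves.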

In this way, neuromorphic computing is a technology that has the potential to permeate our lives in various forms and bring about innovations beyond what we can currently imagine. Although it is still in the early stages of development, it is expected to have a major impact on the future fields of computing and artificial intelligence.

7. Mountains to overcome and challenges ahead: The road ahead until commercialization

Neuromorphic computing holds tremendous potential, but there are still technical and economic challenges to overcome before it can be commercialized.

- Challenges of hardware development: Developing a neuromorphic chip that closely mimics the complex structure and function of the human brain is a very difficult technological challenge. Continued research on new materials, components, and architectures is needed to efficiently implement large numbers of neurons, their connections, and their signal transmission mechanisms.

- Software and algorithm development: New programming models, algorithms, and software development tools are needed to take full advantage of neuromorphic hardware. Efficient mapping and training of existing deep learning models on neuromorphic chips is also an important area of research.

- Commercialization and Cost Issues: Currently, the cost of producing neuromorphic chips is high, and mass production systems have not yet been established. Cost-effective hardware development and commercialization strategies are essential for applying neuromorphic computing to various industries.

- Integration with existing computing infrastructure: It is also important to study how neuromorphic computing can effectively integrate with existing CPU and GPU-based computing systems to play complementary roles.

8. Conclusion: The era of computers that think like the brain is coming

Neuromorphic computing is a revolutionary computing paradigm inspired by the amazing capabilities of the human brain. Although it is still in its early stages, it has the potential to dramatically improve the performance of artificial intelligence, maximize energy efficiency, and open up new computing possibilities. As neuromorphic computing technology continues to advance and become commercialized, we may witness an amazing future where computers that think and learn like the brain become a reality.

9. Summary

Neuromorphic computing is a next-generation computing technology that mimics the structure and operation of the human brain, and aims to implement highly efficient artificial intelligence through parallel processing and event-based operation. It aims to overcome the limitations of the existing von Neumann architecture and shows innovative potential in fields such as real-time data processing and autonomous learning. Major companies such as IBM and Intel are actively conducting research and development, but there are still issues to be resolved in hardware, software, and commercialization. Nevertheless, neuromorphic computing is attracting attention as an important direction for future artificial intelligence and computing technology, and suggests the possibility of an era of computers that think like the brain.
